Data Attributions: The Silent Architects Shaping Trust, Credibility, and Accountability in AI

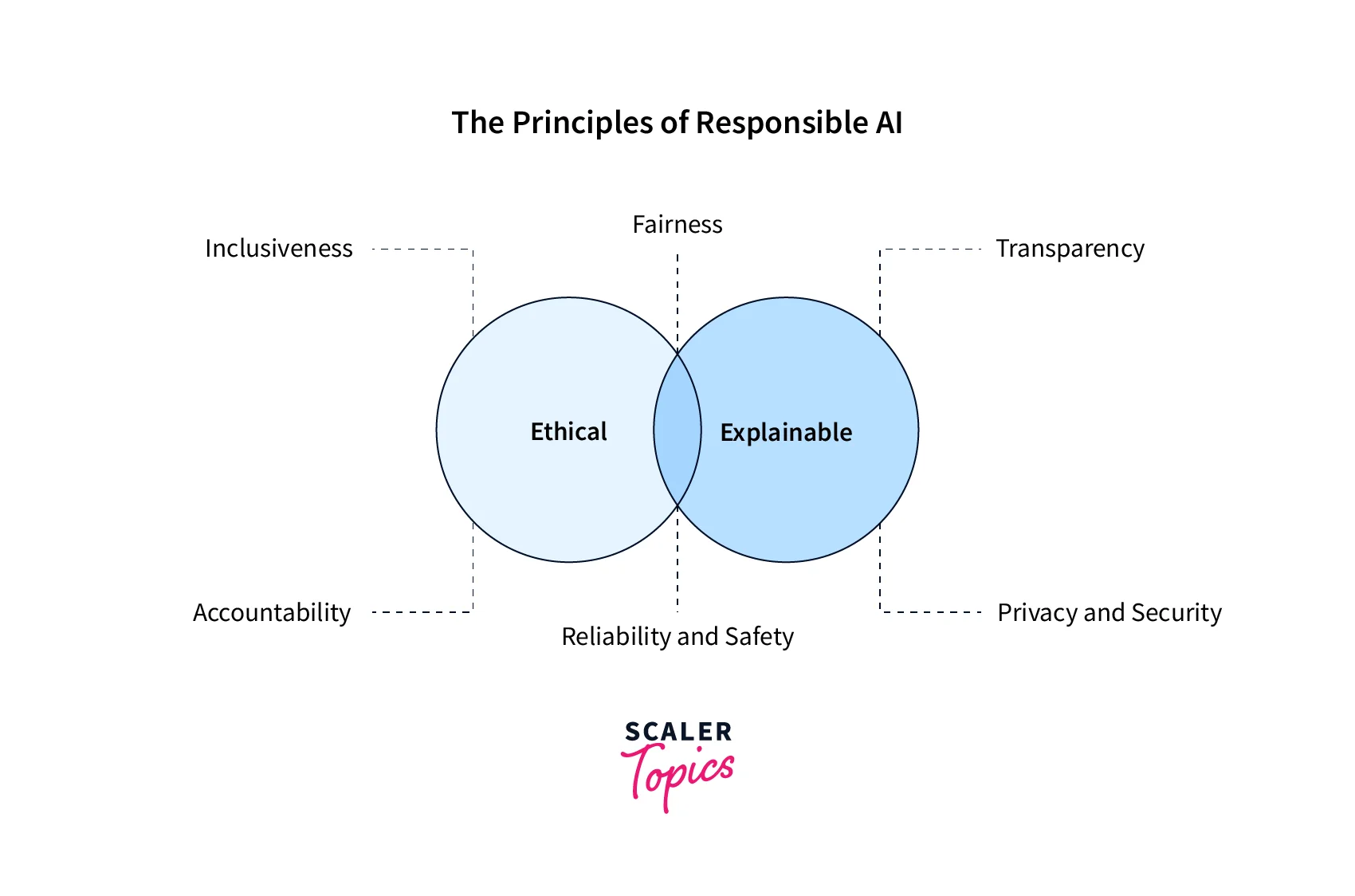

Pioneers in the age of artificial intelligence increasingly rely not just on sophisticated algorithms but on a critical yet often overlooked component: data attributions. As AI systems permeate healthcare, finance, media, and governance, understanding where training data originates, and who or what contributes it, has become essential for transparency, ethics, and legal compliance. Data attributions serve as digital fingerprints that trace the provenance of machine learning inputs, offering a structured record of origin, ownership, and use.

In a world where AI decisions can shape lives, the attribution of data is no longer a technical footnote—it is a cornerstone of responsible innovation. The concept of data attribution merges principles from data lineage, metadata management, and intellectual property law into a unified framework. At its core, data attribution documents the journey of information from its source—whether public datasets, licensed content, or user-generated material—through ingestion, processing, and model training.

This chain ensures that every piece of data used in an AI system carries an identifiable history, enabling stakeholders to verify legitimacy, assess bias, and uphold accountability.
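To make the idea concrete, here is a minimal Python sketch of an attribution record that follows one dataset through the ingestion, processing, and training stages described above. The class name `AttributionRecord`, its fields, and the rule that stages must be recorded in that fixed order are illustrative assumptions, not a standard schema.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical stage names, in the order the article describes them.
STAGES = ("ingestion", "processing", "training")


@dataclass
class AttributionRecord:
    """Illustrative attribution record for one dataset (not a standard schema)."""
    dataset_id: str
    source: str   # e.g. a public dataset, licensed content, or user-generated material
    license: str
    history: list = field(default_factory=list)

    def record_stage(self, stage: str, actor: str) -> None:
        """Append the next lifecycle stage; reject unknown, skipped, or repeated stages."""
        done = len(self.history)
        if done >= len(STAGES) or stage != STAGES[done]:
            raise ValueError(f"unexpected stage {stage!r} after {done} recorded stage(s)")
        self.history.append({
            "stage": stage,
            "actor": actor,  # who or what performed this step
            "at": datetime.now(timezone.utc).isoformat(),
        })

    def is_complete(self) -> bool:
        """True once every stage from ingestion to training has been recorded."""
        return [step["stage"] for step in self.history] == list(STAGES)


record = AttributionRecord("ds-001", "public-archive", "CC-BY-4.0")
record.record_stage("ingestion", "etl-pipeline")
record.record_stage("processing", "data-team")
record.record_stage("training", "ml-team")
print(record.is_complete())  # True
```

Enforcing the order is a design choice. It makes a missing step visible immediately, at the cost of flexibility for pipelines that legitimately revisit a stage.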

Why Data Attribution Matters in Trustworthy AI Systems

Data attribution is the backbone of trust in machine learning. Without it, AI models risk operating as “black boxes,” where inputs and outputs remain opaque and unverifiable. This erodes public confidence and risks cascading failures in high-stakes applications. As Jonathan White, a senior fellow at Stanford’s Human-Centered AI Initiative, explains: “Without clear attribution, even the most accurate model becomes untrustworthy in the eyes of regulators, users, and the public.” Trust hinges not just on performance, but on transparency.

Regulatory Compliance Codified

Governments and international bodies increasingly treat data attribution as a legal imperative.

The European Union’s Artificial Intelligence Act mandates rigorous documentation of data sources for high-risk AI systems, requiring organizations to prove compliance through detailed provenance trails. Similarly, the U.S. National Institute of Standards and Technology (NIST) frameworks emphasize metadata integrity as a prerequisite for safe AI deployment.
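Provenance trails are easier to defend when they can be checked automatically. The Python sketch below audits attribution records for two things: a documented source and a license on an approved list. The record fields, the `audit_provenance` function, and the `ALLOWED_LICENSES` allowlist are hypothetical. Neither the AI Act nor NIST prescribes this format, and a real audit would check the actual licensing agreements rather than a label.

```python
from typing import Iterable, List

# Hypothetical allowlist; the real terms come from an organization's licensing agreements.
ALLOWED_LICENSES = frozenset({"CC-BY-4.0", "CC0-1.0", "licensed-internal"})


def audit_provenance(records: Iterable[dict], allowed=ALLOWED_LICENSES) -> List[str]:
    """Return a finding for every record lacking a documented source or an allowed license."""
    findings = []
    for rec in records:
        rid = rec.get("dataset_id", "<unknown>")
        if not rec.get("source"):
            findings.append(f"{rid}: no documented source")
        lic = rec.get("license")
        if not lic:
            findings.append(f"{rid}: no license recorded")
        elif lic not in allowed:
            findings.append(f"{rid}: license {lic!r} not on allowlist")
    return findings


records = [
    {"dataset_id": "ds-001", "source": "public-archive", "license": "CC-BY-4.0"},
    {"dataset_id": "ds-002", "source": "", "license": "CC0-1.0"},
    {"dataset_id": "ds-003", "source": "web-scrape", "license": "unknown"},
]
for finding in audit_provenance(records):
    print(finding)
```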

Attributions enable auditors to trace datasets back to their original contributors, verify licensing agreements, and ensure that no copyrighted or sensitive material is used without permission.

Bias Detection and Algorithmic Fairness

Data attribution helps organizations identify and mitigate bias at its source. When the origins of training data are clearly documented, teams can analyze demographic, geographic, or contextual imbalances that might otherwise skew model behavior.

For instance, facial recognition systems have historically performed poorly on underrepresented groups because of skewed training datasets. The issue is now under legal and ethical scrutiny, partly because of better attribution practices. By tracing data lineage, developers can recognize patterns of exclusion or overrepresentation and make corrective adjustments before deployment.
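Once origins are documented, a first-pass imbalance check can be as simple as counting records per attribute value. The following sketch makes two assumptions. First, each attribution record carries a hypothetical `region` field. Second, the `min_share` threshold is arbitrary. The check flags values that are underrepresented in the metadata, but on its own it says nothing about how a model performs on those groups.

```python
from collections import Counter


def representation_report(records, attribute, min_share=0.1):
    """Share of records per value of `attribute`, plus values below `min_share`.

    Records without the attribute are counted as "undocumented", which is itself
    a signal that attribution metadata is incomplete.
    """
    counts = Counter(rec.get(attribute, "undocumented") for rec in records)
    total = sum(counts.values())
    if total == 0:
        return {}, []
    shares = {value: n / total for value, n in counts.items()}
    flagged = sorted(value for value, share in shares.items() if share < min_share)
    return shares, flagged


records = (
    [{"region": "north-america"}] * 6
    + [{"region": "europe"}] * 3
    + [{"region": "africa"}]
)
shares, flagged = representation_report(records, "region", min_share=0.15)
print(flagged)  # ['africa']
```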

Technical Foundations: How Data Attribution Works Beneath the Surface

Behind the high-level concept lies a robust technical infrastructure grounded in metadata standards, digital signatures, and active data governance.

- Metadata Enrichment: Each data point is tagged with structured metadata: source identifiers, licensing terms, collection timestamps, and processing history. Standards like DCAT (Data Catalog Vocabulary) or schema.org enable interoperable attribution across platforms.
- Digital Provenance Tracking: Blockchain-inspired immutable logs and cryptographic hashing verify data integrity and make tampering detectable. Every transformation (cleaning, augmentation, merging) is timestamped and linked to responsible parties, creating an unbroken chain of custody.
- Access and Usage Auditing: Attribution systems integrate with data governance tools to track who accessed or modified data, when, and for what purpose. This real-time visibility supports compliance reporting and internal accountability.
- Attribution Standards and Frameworks: Industry-wide initiatives like the Partnership on AI’s Transparency Framework and ISO/IEC’s emerging data governance norms provide blueprints for consistent attribution practices.
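Provenance tracking can be illustrated with a hash chain. Each log entry stores the hash of the previous entry, so editing an earlier entry breaks verification. This is a minimal sketch, assuming SHA-256 over canonical JSON. The `ProvenanceLog` class and its field names are hypothetical, and it is a plain append-only log rather than a blockchain.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(payload: dict) -> str:
    # Canonical JSON (sorted keys) so identical entries always hash identically.
    encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


class ProvenanceLog:
    """Append-only, hash-chained log of data transformations (illustrative only)."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    def append(self, action: str, actor: str, data: bytes) -> dict:
        """Record a transformation, the responsible party, and a hash of the resulting data."""
        prev = self.entries[-1]["entry_hash"] if self.entries else self.GENESIS
        body = {
            "action": action,  # e.g. "clean", "augment", "merge"
            "actor": actor,    # responsible party
            "at": datetime.now(timezone.utc).isoformat(),
            "data_sha256": hashlib.sha256(data).hexdigest(),
            "prev_hash": prev,
        }
        entry = {**body, "entry_hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; editing, reordering, or deleting a non-final entry breaks the chain."""
        prev = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "entry_hash"}
            if body.get("prev_hash") != prev or _digest(body) != entry.get("entry_hash"):
                return False
            prev = entry["entry_hash"]
        return True


log = ProvenanceLog()
log.append("ingest", "etl-pipeline", b"raw rows")
log.append("clean", "data-team", b"cleaned rows")
print(log.verify())  # True
log.entries[0]["actor"] = "someone-else"  # tamper with history
print(log.verify())  # False
```

A hash chain alone does not catch everything. Tampering is detected only if the latest `entry_hash` is also stored or signed somewhere the log's editor cannot reach. Otherwise, someone could truncate the tail or rewrite the whole chain consistently. Anchoring that final hash is where the digital signatures mentioned above come in.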

Tracking data origins with this kind of precision turns model trust from an aspiration into a measurable outcome.

Adoption still faces obstacles, however. Legacy systems often lack built-in metadata layers and require costly retrofits. Balancing transparency with privacy is also difficult. When source data involves personal information, careful anonymization is needed to avoid re-identification risks.
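Keyed hashing is one common way to reduce exposure of contributor identities while keeping records linkable. The sketch below replaces direct identifiers with HMAC-SHA256 tokens using Python's standard `hmac` module. Strictly, this is pseudonymization rather than anonymization. Whoever holds the key can re-link tokens, and quasi-identifiers left in the record (location, age, timestamps) can still enable re-identification. The field names and the `redact_record` helper are illustrative assumptions.

```python
import hashlib
import hmac
import secrets

# Hypothetical set of direct-identifier fields to pseudonymize.
IDENTIFIER_FIELDS = ("contributor_id", "email")


def pseudonymize(value: str, key: bytes) -> str:
    """HMAC-SHA256 token: the same value and key always give the same token."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def redact_record(record: dict, key: bytes, fields=IDENTIFIER_FIELDS) -> dict:
    """Return a copy of `record` with direct identifiers replaced by tokens."""
    out = dict(record)
    for name in fields:
        if out.get(name) is not None:
            out[name] = pseudonymize(str(out[name]), key)
    return out


# In practice the key would live in a secrets manager, never alongside the data.
key = secrets.token_bytes(32)
record = {"dataset_id": "ds-007", "contributor_id": "user-42",
          "email": "contributor@example.org", "license": "CC-BY-4.0"}
safe = redact_record(record, key)
print(safe["license"], safe["contributor_id"][:12])
```

The secret key matters because identifiers such as user IDs or email addresses are often guessable. An unkeyed hash of a guessable value can be reversed simply by hashing candidate values and comparing.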

Real-World Applications: From Finance to Journalism

In finance, banks deploy data attributions to validate risk models trained on market datasets, ensuring that sources comply with SEC regulations and insider trading rules. JPMorgan Chase, for instance, uses attribution tools to trace alternative data from social feeds and transaction logs, mitigating legal exposure and enhancing model explainability.

Newsrooms and media outlets increasingly apply attribution principles to safeguard AI-generated content. When automated journalism tools draft stories or curate content, tracking the origin of source material, from press releases to public archives, protects intellectual property and helps prevent misinformation.

The Associated Press, through its AI lab, now embeds attribution records into every story model, allowing editors to verify sourcing before publication.
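At its simplest, a pre-publication sourcing check confirms that every source a draft relies on has an attribution record. This sketch does not describe the Associated Press's system. The registry structure, source IDs, and the `unattributed_sources` function are all hypothetical.

```python
def unattributed_sources(cited_ids, registry):
    """Return cited source IDs with no attribution record, in citation order, without duplicates."""
    missing, seen = [], set()
    for source_id in cited_ids:
        if source_id not in registry and source_id not in seen:
            missing.append(source_id)
            seen.add(source_id)
    return missing


# Hypothetical attribution registry keyed by source ID.
registry = {
    "pr-001": {"type": "press release", "origin": "example agency"},
    "archive-77": {"type": "public archive", "origin": "example library"},
}
draft_sources = ["pr-001", "wire-999", "archive-77", "wire-999"]
missing = unattributed_sources(draft_sources, registry)
ready_to_publish = not missing
print(missing)  # ['wire-999']
```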

Emerging standards aim to unify these practices globally, reducing compliance burdens and fostering cross-border trust.

In the quiet work of tracing data origins, organizations build not just better models, but greater public confidence. In the age of intelligent machines, the truth behind the data defines both the technology and the trust it enables.
