Hardware Acceleration Is Turned Off—What You’re Losing When Performance Hiders Activate

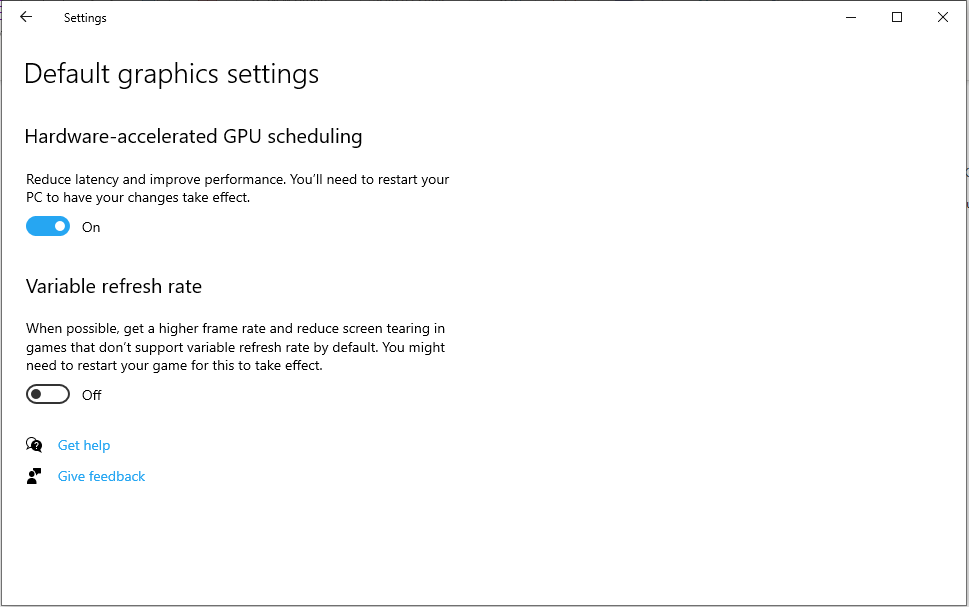

When hardware acceleration is disabled, a quiet yet significant shift occurs beneath the surface of today’s high-performance computing environments. Systems designed to harness dedicated GPUs, specialized accelerators, and optimized silicon pipelines often rely on accelerated hardware to deliver real-time processing, smooth graphical rendering, and low-latency responses. Yet, an abrupt disabling—whether by user preference, software misconfiguration, or system policy—can degrade performance across video editing, gaming, machine learning, and cloud services.

This article explores how hardware acceleration works, why it’s often switched off, the consequences of disabling it, and how stakeholders from casual users to enterprises can manage this critical setting with precision.

Hardware acceleration leverages purpose-built processors—graphics processing units (GPUs), tensor cores, and neural processing units (NPUs)—to offload complex computations from the central processing unit (CPU), thereby freeing system resources and accelerating time-intensive tasks. Modern applications—from 4K video encoding and real-time rendering in design software to AI inference and streaming—depend heavily on these accelerators to maintain responsiveness and efficiency.

While software-based processing remains viable, it typically consumes more CPU cycles and increases latency. As hardware acceleration enables parallel processing at scale, its disablement becomes a tactical trade-off between control and performance.

The Mechanics Behind Hardware Acceleration

Hardware acceleration operates at multiple layers of computing architecture. On the graphics front, GPUs render pixels and frames in parallel, making them essential for modern displays and 3D rendering. Similarly, cryptographic accelerators and AI inference engines embedded in chipsets handle operations like encryption, pattern recognition, and neural network computations far faster than general-purpose CPU pipelines. These accelerators rely on dedicated architectures and instruction-set extensions, such as AMD’s RDNA GPU architecture, Apple’s Neural Engine, or Intel’s Deep Learning Boost, engineered to execute specific workloads efficiently. When accelerated processing is active, device drivers and system firmware route data through these optimized paths.

Turning acceleration off redirects that workload back to the CPU, where far fewer operations can run in parallel. This reversion increases processing overhead, particularly for data-heavy operations. For example, encoding a 4K video on a powerful GPU with hardware acceleration can, in favorable cases, finish 4–8x faster than software-only encoding.

Similarly, data-heavy AI models may double or triple inference times when processing relies on CPU-intensive, non-accelerated methods.
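To make the encoding comparison concrete, here is a minimal sketch of how the same job might be routed to a hardware or a software encoder. It assumes the `ffmpeg` command-line tool built with NVENC support and an NVIDIA GPU; the helper name `build_encode_command` and the file names are hypothetical, and other vendors use different encoder names.

```python
from typing import List


def build_encode_command(src: str, dst: str, use_hardware: bool) -> List[str]:
    """Return an ffmpeg argument list for H.264 encoding (sketch only)."""
    # h264_nvenc is ffmpeg's NVIDIA hardware encoder (assumes a build that
    # includes it); libx264 is the CPU-based software encoder.
    encoder = "h264_nvenc" if use_hardware else "libx264"
    # -y overwrites the output file; -c:a copy leaves the audio untouched.
    return ["ffmpeg", "-y", "-i", src, "-c:v", encoder, "-c:a", "copy", dst]


if __name__ == "__main__":
    # Hypothetical file names, printed rather than executed.
    print(" ".join(build_encode_command("input_4k.mp4", "out_hw.mp4", True)))
    print(" ".join(build_encode_command("input_4k.mp4", "out_sw.mp4", False)))
```

Timing both commands on the same clip is a simple way to see what acceleration contributes on a given machine; the ratio depends heavily on the GPU, the CPU, and the encoder settings.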

Why Hardware Acceleration Is Frequently Disabled

Despite its benefits, software or system-wide disabling of hardware acceleration remains common across devices and use cases. Engineers and admins often disable accelerators for several key reasons.

- Security and Privacy Controls: Some organizations disable accelerators to reduce exposure to side-channel attacks, particularly in privacy-sensitive environments like regulated data centers or government systems.

- Compatibility and Stability: Older software, legacy applications, or virtualized environments may misbehave when forced to use accelerated pipelines, causing crashes or performance slippage.

- Resource Management: On shared systems, disabling acceleration for lower-priority applications can keep the GPU and its memory free for the workloads that need them most, preventing resource contention during heavy parallel processing.

- User Customization: Power users or enthusiasts frequently toggle off acceleration to fine-tune performance, especially on laptops where battery life and thermal throttling become critical.

Yet, disabling hardware acceleration without clear awareness of its impact often results in suboptimal user experience.

The absence of accelerated processing can turn millisecond-responsive applications into sluggish, unresponsive tools, especially those built on GPU-oriented graphics APIs such as DirectX and Vulkan or on machine learning frameworks such as TensorFlow.
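Acceleration is not always switched off through a visible toggle; sometimes it is an environment setting. As one illustration, CUDA-based applications respect the `CUDA_VISIBLE_DEVICES` environment variable, and setting it to an empty string hides all NVIDIA GPUs from the process. The sketch below builds such an environment; the helper name `env_without_gpu` is hypothetical, and the setting only affects software that uses CUDA.

```python
import os
from typing import Dict, Optional


def env_without_gpu(base: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Copy an environment and hide all CUDA devices from it."""
    env = dict(os.environ if base is None else base)
    # An empty value means "no visible GPUs" for CUDA applications,
    # so they fall back to the CPU or fail if they require a GPU.
    env["CUDA_VISIBLE_DEVICES"] = ""
    return env


if __name__ == "__main__":
    env = env_without_gpu({"PATH": "/usr/bin"})
    print(env)
    # To launch a child process with this environment, one could call
    # subprocess.run([...], env=env_without_gpu()).
```

Checking for a setting like this is a reasonable first step when a GPU-capable application is unexpectedly slow.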

Real-World Impact on Performance and Efficiency

The tangible effects of disabling hardware acceleration become most noticeable in performance-critical domains. In video editing, for example, rendering timelines with soft lighting, particle effects, or high-resolution compositing slows dramatically. Professional editors report delays of several seconds per 10-second clip when acceleration is turned off, especially on mid-tier hardware.

In gaming, accelerated graphics pipelines deliver higher frame rates and reduced input lag, both essential for competitive play. Disabling acceleration often leads to lower FPS, stuttering animations, and missed inputs, directly affecting gameplay quality.

For AI workloads, such as real-time video analysis or natural language processing, hardware-accelerated inference can cut processing time from minutes to seconds. Turning it off may render model deployment impractical for time-bound or edge-device applications, nullifying investments in smart sensors or autonomous systems.

Benchmarking studies underscore these losses: one 2023 test on 4K video encoding showed GPU acceleration reduced total time from 8 minutes to 3 minutes and 42 seconds. Similarly, reward model inference built on machine learning frameworks like PyTorch has been reported to show up to 70% higher latency when running on CPU alone.
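The encoding figures above are easier to compare once converted into a speedup factor. The short sketch below does that arithmetic; the function names are illustrative, and the only inputs are the two durations quoted in the text.

```python
def speedup(baseline_seconds: float, accelerated_seconds: float) -> float:
    """How many times faster the accelerated run is than the baseline."""
    return baseline_seconds / accelerated_seconds


def time_saved_fraction(baseline_seconds: float, accelerated_seconds: float) -> float:
    """Share of the baseline time that acceleration removes."""
    return 1 - accelerated_seconds / baseline_seconds


if __name__ == "__main__":
    cpu_only = 8 * 60        # 8 minutes = 480 seconds
    with_gpu = 3 * 60 + 42   # 3 minutes 42 seconds = 222 seconds
    print(f"{speedup(cpu_only, with_gpu):.2f}x")              # 2.16x
    print(f"{time_saved_fraction(cpu_only, with_gpu):.0%}")   # 54%
```

In other words, this particular test shows roughly a 2.2x speedup, with a little over half of the encoding time saved. That is a meaningful gain, though a more modest one than best-case figures for high-end GPUs.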

While optimized CPU algorithms continue to improve, they still lag behind specialized accelerators by design. Notably, Apple’s M-series chips demonstrate how tight software-hardware integration boosts performance by up to 3x versus non-optimized counterparts—making accelerator disablement a potential bottleneck in multi-chip architectures.

Strategies for Managing Hardware Acceleration Effectively

Rather than an all-or-nothing switch, users and administrators should implement strategic controls over hardware acceleration. Smart dependency mapping identifies core workloads tied to accelerated components, which is critical in mixed CPU-GPU server clusters or professional development environments.

Key recommendations include:

- Monitor Workload Profiles: Analyze application usage patterns to isolate which tools benefit most from acceleration. Tools like GPU-Z, nvidia-smi, and system monitor utilities help pinpoint performance bottlenecks.

- Apply Conditional Enablement: Use configuration files or system policies to activate acceleration only during relevant sessions—such as enabling it for rendering or AI tasks, but disabling on media playback or idle computation.

- Leverage Fallback Mechanisms: Modern platforms often include automatic fallback systems; ensure these are enabled to preserve functionality when an accelerator is unavailable or underperforms.

- Update Firmware and Drivers: Manufacturers frequently release updates that improve driver efficiency and stability, reducing errors tied to hardware acceleration.

- For users: Access acceleration settings in device preferences or unified control panels, and toggle with caution based on task demands.

- For enterprises: Deploy orchestration tools to enforce acceleration policies across fleets, ensuring compliance and performance consistency.

- For developers: Build adaptive applications that detect accelerator availability and scale processing dynamically, enhancing user experience across device tiers.
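For the developer recommendation, a minimal sketch of accelerator detection with a CPU fallback might look like the following. It assumes PyTorch as the framework and uses its `torch.cuda.is_available()` check; the function names and the batch sizes are hypothetical placeholders, not tuned values.

```python
import importlib.util


def pick_device() -> str:
    """Return "cuda" when PyTorch reports a usable GPU, otherwise "cpu"."""
    if importlib.util.find_spec("torch") is None:
        return "cpu"  # PyTorch is not installed, so stay on the CPU path
    import torch
    return "cuda" if torch.cuda.is_available() else "cpu"


def batch_size_for(device: str) -> int:
    """Scale the work per step to the device (illustrative numbers only)."""
    return 64 if device == "cuda" else 8


if __name__ == "__main__":
    device = pick_device()
    print(device, batch_size_for(device))
```

The point of the design is that the application keeps working when acceleration is disabled, and simply does less work per step instead of failing.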

In enterprise infrastructure and cloud environments, hardware acceleration is a cornerstone of scalable performance. Disabling it can degrade machine learning model throughput, delay real-time analytics, and increase operational costs due to longer job runtimes.

Conversely, enabling it judiciously delivers tangible gains in speed, energy efficiency, and resource utilization, particularly when scaling across virtual machines or containerized services. Cloud providers and data centers increasingly deploy custom silicon, from AWS Inferentia and Trainium chips to Azure’s Maia accelerator, underscoring hardware acceleration as non-negotiable for competitive advantage. Yet, even in these setups, disabling acceleration risks undermining the very performance gains these investments promise.

Selective, context-aware management remains the optimal approach.
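One way to express selective, context-aware management is a small policy object that maps workload types to an acceleration decision. The sketch below is illustrative only: the class name `AccelPolicy` and the workload labels are hypothetical, and a real deployment would load such rules from its own configuration or fleet-management system.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class AccelPolicy:
    """Per-workload acceleration rules with a default for everything else."""
    default: bool
    overrides: Dict[str, bool]

    def allows(self, workload: str) -> bool:
        # Use the explicit rule when one exists, otherwise the default.
        return self.overrides.get(workload, self.default)


# Hypothetical policy: acceleration stays off unless a workload opts in.
POLICY = AccelPolicy(
    default=False,
    overrides={"rendering": True, "ai_inference": True, "legacy_app": False},
)

if __name__ == "__main__":
    for name in ("rendering", "ai_inference", "legacy_app", "idle"):
        print(name, POLICY.allows(name))
```

Keeping the rules in one place makes the trade-off explicit and auditable, rather than leaving it to per-application toggles.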

Navigating the Trade-Off: Agency Over Acceleration

Hardware acceleration is not a universal feature to be enforced or ignored. It is a tool and, like any tool, best used with intent. Disabling it is neither inherently good nor bad, but it demands thoughtful calibration to match workload needs, security requirements, and user expectations. In an era where real-time processing defines modern computing, the ability to toggle acceleration responsibly gives users control over performance and efficiency. Stakeholders who proactively manage this setting gain not just speed, but strategic leverage in an ever-accelerating digital landscape.

Ultimately, hardware acceleration is more than a technical toggle. It’s a linchpin in today’s high-performance ecosystem: when wielded wisely, it enables breakthroughs; when blindly disabled, it imperils efficiency.

As computing evolves, understanding and controlling this switch remains essential for anyone seeking peak performance in hardware-dependent applications.

![How To Disable Hardware Acceleration [All Apps] - eXputer.com](https://exputer.com/wp-content/uploads/2023/03/Disable-Hardware-Acceleration-scheduling.png)

Related Post

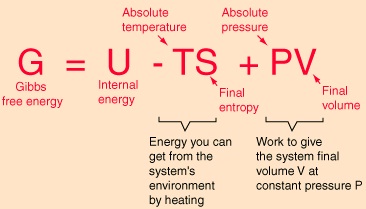

Unlocking Clean Energy: The Science Behind Water Electrolysis and Gibbs Free Energy

What the Stars Say: Unlocking the 13th of November Zodiac’s Hidden Wisdom

Master Pineapple Growing: The Definitive Guide to Cultivating Your Own Sweet Harvest

Tom Brady’s Age: The Uncompromising Pro Bowl Legacy of a deadline-Length Champion