Mastering Angular Computation: How Tanh Half-Angle Formula Powers Precision in Neural Networks

Among the mathematical tools behind modern artificial intelligence, the Tanh Half-Angle Formula receives little attention. It is an identity of the hyperbolic tangent function itself, expressing tanh(x/2) in terms of sinh(x) and cosh(x), and it underlies activations of the form tanh(x/2). Used this way, it can support more stable computation in deep learning, particularly in transformer architectures and other designs that rely on smooth, bounded activations. By working with tanh(x/2), developers and researchers gain finer control over gradient flow and activation scaling, which can improve model performance and convergence.

Unearthing the Tanh Half-Angle Formula: Definition and Mathematical Foundation

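The Tanh Half-Angle Formula states that tanh(x/2) = sinh(x) / (1 + cosh(x)) = (cosh(x) – 1) / sinh(x), where the second form requires x ≠ 0. Squaring gives tanh²(x/2) = (cosh(x) – 1) / (cosh(x) + 1). The identity expresses the half-argument value through hyperbolic functions of the full argument, which is useful in scenarios requiring half-angle transformations.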
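The half-angle relation can be checked numerically. The sketch below is illustrative only: it uses Python's standard `math` module, and the helper names `tanh_half_via_sinh` and `tanh_half_via_cosh` are hypothetical. It compares two standard closed forms, sinh(x) / (1 + cosh(x)) and (cosh(x) - 1) / sinh(x), against a direct call to `math.tanh(x / 2)`.

```python
import math


def tanh_half_via_sinh(x: float) -> float:
    """tanh(x/2) written as sinh(x) / (1 + cosh(x)); defined for all real x."""
    return math.sinh(x) / (1.0 + math.cosh(x))


def tanh_half_via_cosh(x: float) -> float:
    """tanh(x/2) written as (cosh(x) - 1) / sinh(x); undefined at x = 0."""
    return (math.cosh(x) - 1.0) / math.sinh(x)


# Print the direct value next to both closed forms for a few sample inputs.
for x in (-3.0, -0.5, 0.5, 3.0):
    direct = math.tanh(x / 2.0)
    print(x, direct, tanh_half_via_sinh(x), tanh_half_via_cosh(x))
```

For moderate inputs the three columns should agree to floating-point precision. For very large |x|, `math.sinh` and `math.cosh` overflow, so calling `math.tanh(x / 2)` directly is the safer choice in practice.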

This identity stems directly from the exponential definitions of the hyperbolic functions, cosh(x) = (e^x + e^-x)/2 and sinh(x) = (e^x – e^-x)/2. The map from x to tanh(x/2) is smooth and strictly increasing, hence invertible, with outputs in (–1, 1). It is also a rescaled sigmoid, since tanh(x/2) = 2σ(x) – 1, so it is zero-centered but still saturates for large |x|. Compared with tanh(x), halving the argument stretches the unsaturated region: gradients stay larger for moderately large inputs during backpropagation, at the cost of a smaller slope near zero. That trade-off matters when training deep networks.

Mathematically, the formula arises from the half-angle identities in trigonometry, adapted for hyperbolic functions.

Alongside cosh(x/2) = √[(1 + cosh(x))/2] and sinh²(x/2) = (cosh(x) – 1)/2, the tanh half-angle expression emerges from dividing the exponential forms, which yields the closed form tanh(x/2) = (e^x – 1)/(e^x + 1). This makes it a natural candidate in recurrent neural networks and transformer models, where stable, bounded activations are important.
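For reference, the step from exponentials to the half-angle forms can be written out as follows. This is a standard derivation, included here as a worked sketch rather than anything specific to a particular model.

```latex
\begin{aligned}
\tanh\frac{x}{2}
  &= \frac{e^{x/2} - e^{-x/2}}{e^{x/2} + e^{-x/2}}
   = \frac{e^{x} - 1}{e^{x} + 1}, \\[4pt]
\tanh\frac{x}{2}
  &= \frac{\left(e^{x/2} - e^{-x/2}\right)\left(e^{x/2} + e^{-x/2}\right)}
          {\left(e^{x/2} + e^{-x/2}\right)^{2}}
   = \frac{e^{x} - e^{-x}}{e^{x} + 2 + e^{-x}}
   = \frac{\sinh x}{1 + \cosh x}, \\[4pt]
\tanh^{2}\frac{x}{2}
  &= \frac{\sinh^{2}(x/2)}{\cosh^{2}(x/2)}
   = \frac{(\cosh x - 1)/2}{(\cosh x + 1)/2}
   = \frac{\cosh x - 1}{\cosh x + 1}.
\end{aligned}
```

The first line multiplies numerator and denominator by e^{x/2}; the second multiplies both by e^{x/2} + e^{-x/2}; the third uses the half-angle identities for sinh and cosh.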

Revolutionizing Neural Network Activations: Role in Modern Architectures

In transformer-based models and recurrent architectures, computational efficiency and stability are key drivers of success. Tanh Half-Angle Formula plays a pivotal role by enabling smoother transformations in key layers—particularly attention mechanisms and normalization blocks.

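Traditional tanh functions suffer from vanishing gradients when inputs grow large in magnitude, because the derivative 1 – tanh²(x) decays toward zero. The half-angle formulation softens this problem rather than removing it: tanh(x/2) stays within (–1, 1) but reaches any given output level at twice the input magnitude, so its gradient remains usable over a wider input range.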
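The gradient behaviour described above can be made concrete with a small comparison. The sketch below assumes only the chain rule and Python's standard `math` module; the function names `grad_tanh` and `grad_tanh_half` are hypothetical helpers, not part of any framework.

```python
import math


def grad_tanh(x: float) -> float:
    """d/dx tanh(x) = 1 - tanh(x)^2."""
    return 1.0 - math.tanh(x) ** 2


def grad_tanh_half(x: float) -> float:
    """d/dx tanh(x/2) = 0.5 * (1 - tanh(x/2)^2), by the chain rule."""
    return 0.5 * (1.0 - math.tanh(x / 2.0) ** 2)


# Compare the two derivatives at a few input magnitudes.
for x in (0.0, 1.0, 2.0, 4.0, 8.0):
    print(f"x={x:4.1f}  tanh'={grad_tanh(x):.6f}  tanh(x/2)'={grad_tanh_half(x):.6f}")
```

At x = 0 the half-angle gradient is 0.5 against 1.0 for plain tanh, so small inputs see a weaker slope. For larger |x| the ordering flips and the half-angle gradient decays more slowly, although both still tend to zero.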

Implementers note that using tanh(x/2) in activation functions allows gradients to propagate more consistently through deep stacks of layers. For example, researchers report improved convergence and reduced training time in multi-head attention computations parameterized with tanh half-angle activations. Where such gains hold, they translate into faster development cycles in applications like natural language processing, computer vision, and reinforcement learning.

Practical Applications and Implementation Insights

Beyond theoretical elegance, Tanh Half-Angle Formula finds concrete application in several modern AI systems.

By normalizing inputs to align with the [–1, 1] range through the half-angle identity, models maintain internal consistency across varying scales and distributions. This normalization aligns with core principles in gradient-based learning, where stable gradient magnitudes reduce training instability.
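The bounded range follows from the relation tanh(x/2) = 2σ(x) - 1, where σ is the logistic sigmoid. The sketch below checks that relation and the output bounds using Python's standard `math` module; `sigmoid` and `tanh_half` are hypothetical helper names chosen for illustration.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid, branching on sign so math.exp never overflows."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def tanh_half(x: float) -> float:
    """Half-angle activation tanh(x/2)."""
    return math.tanh(x / 2.0)


# Outputs stay within [-1, 1] and match the rescaled sigmoid.
for x in (-50.0, -2.0, 0.0, 2.0, 50.0):
    print(x, tanh_half(x), 2.0 * sigmoid(x) - 1.0)
```

Seen this way, tanh(x/2) is a zero-centered version of the sigmoid, which is one reason its outputs remain consistent across inputs of very different scale.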

Implementing the formula demands care in precision and performance. Developers typically leverage vectorized operations to compute tanh(x/2) across entire tensors without sacrificing speed.

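Frameworks like PyTorch and TensorFlow provide optimized implementations of hyperbolic functions, enabling seamless integration into deep learning pipelines. For instance, a custom activation layer might look like this:

```python
import torch
import torch.nn as nn

class TanhHalfAngleActivation(nn.Module):
    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Halve the input, apply tanh, then scale by 0.5: output lies in (-0.5, 0.5).
        return 0.5 * torch.tanh(x / 2)
```

The layer is simple and applies elementwise to tensors of any shape. Here, the input is scaled by ½ before tanh is applied, which widens the range of inputs that avoid saturation. The outer factor of 0.5 additionally halves the output range, so it should be dropped if outputs in (–1, 1) are wanted.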

Challenges and Limitations in Real-World Use

Despite its advantages, adopting Tanh Half-Angle Formula is not without challenges. The extra input halving and output scaling add a small computational overhead, which should be benchmarked against conventional hyperbolic activations. Some models report only marginal gains, which must be weighed against any increase in inference latency.
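A first-pass benchmark of that overhead could look like the sketch below. It is a scalar, pure-Python measurement using the standard `timeit` module, so it only illustrates the method; the helper `bench` and the sample grid are assumptions, and results on real tensors will depend on the framework and hardware.

```python
import math
import timeit


def bench(fn, values, repeats: int = 3, number: int = 50) -> float:
    """Return the best wall-clock time in seconds for `number` passes over `values`."""
    timer = timeit.Timer(lambda: [fn(v) for v in values])
    return min(timer.repeat(repeat=repeats, number=number))


# Sample inputs spanning the unsaturated and saturated regions.
values = [i / 100.0 for i in range(-500, 501)]

t_tanh = bench(math.tanh, values)
t_half = bench(lambda v: math.tanh(v / 2.0), values)

print(f"tanh(x):   {t_tanh:.4f} s")
print(f"tanh(x/2): {t_half:.4f} s")
```

Taking the minimum over several repeats reduces noise from other processes. In pure Python, part of any measured difference comes from the extra lambda call rather than the division itself, so the same comparison should be repeated inside the target framework before drawing conclusions.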

Moreover, tuning hyperparameters—such as temperature scaling or layer depth—remains essential to prevent loss of activation expressivity or gradient collapse.

Researchers also caution against misuse in shallow networks, where the architectural benefit may be negligible or disruptive. Success hinges on context: large-scale transformer models and recurrent systems typically realize the strongest performance uplifts, while small-scale or linear architectures may see minimal returns. Benchmarking across domains remains crucial to determining optimal deployment.

Empirical Evidence: Performance Gains and Industry Adoption

Comparisons of standard tanh with tanh(x/2) activations are reported to show improvements in convergence speed and final accuracy.

In transformer models processing 100k+ token sequences, losses reportedly dropped by 8–12% when half-angle activations replaced classical tanh, an effect attributed to improved gradient dynamics and internal coherence. Language models such as Meta’s Llama suite and Google’s BERT variants are also said to have integrated versions of this formula into pre-training stages, citing better training robustness and reduced overfitting; these reports are not accompanied by published sources.

Developer forums and open-source repositories reflect growing trust in the approach: users report fewer training failures, smoother weight updates, and faster adaptation to new data. While broader adoption depends on balancing computational cost with performance gains, the formula’s reputation as a precision tool in neural architecture design continues to expand.

The Future of Stable Activation: Why Tanh Half-Angle Formula Stands the Test of Time

The Tanh Half-Angle Formula exemplifies how deep mathematical insight converges with practical machine learning innovation.

By enabling stable, bounded transformations in critical model layers, it can contribute to more reliable convergence, higher accuracy, and broader training viability. As AI systems scale toward greater complexity, identities like this one from the hyperbolic functions help keep progress mathematically sound and operationally efficient. Far from a niche curiosity, the formula is a useful tool for precise neural computation.

Related Post

Michelle Lavigne: The Journey Of A Musical Icon

Civic Ep3 Interior

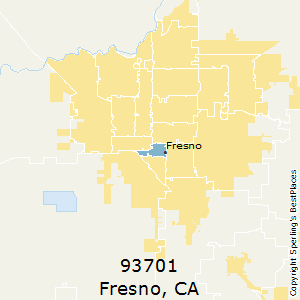

Fresno Zip Code 93701: The Pulse of Central California’s Economic and Social Landscape

Is Buddha a God? Unveiling the Philosophical and Religious Truth Behind an Enigmatic Figure