Unlocking the Future: How Flux Technology is Revolutionizing Modern Data Infrastructure

As the digital transformation wave sweeps across industries, the infrastructure behind scalable, real-time systems has become the linchpin of innovation, and nowhere is that clearer than in the rise of Flux architecture. Built as fully integrated computational frameworks on dynamic data-flow principles, Flux systems are redefining how organizations process, store, and act on information as it arrives. From fintech platforms that execute transactions in near real time to healthcare networks synchronizing life-critical data across continents, Flux-based infrastructures are becoming the invisible engine behind next-generation digital services.

At its core, a Flux system operates on event-driven data streams rather than static datasets. This approach lets businesses react as soon as patterns, anomalies, or opportunities emerge. According to Dr. Elena Marquez, lead architect at NextaFlow Systems, “Flux doesn’t just process data; it orchestrates it. It creates a living data ecosystem where every input evolves the system’s intelligence autonomously.” This self-adapting capability is transforming sectors that depend on instantaneous action, from autonomous logistics networks to predictive maintenance in industrial IoT.

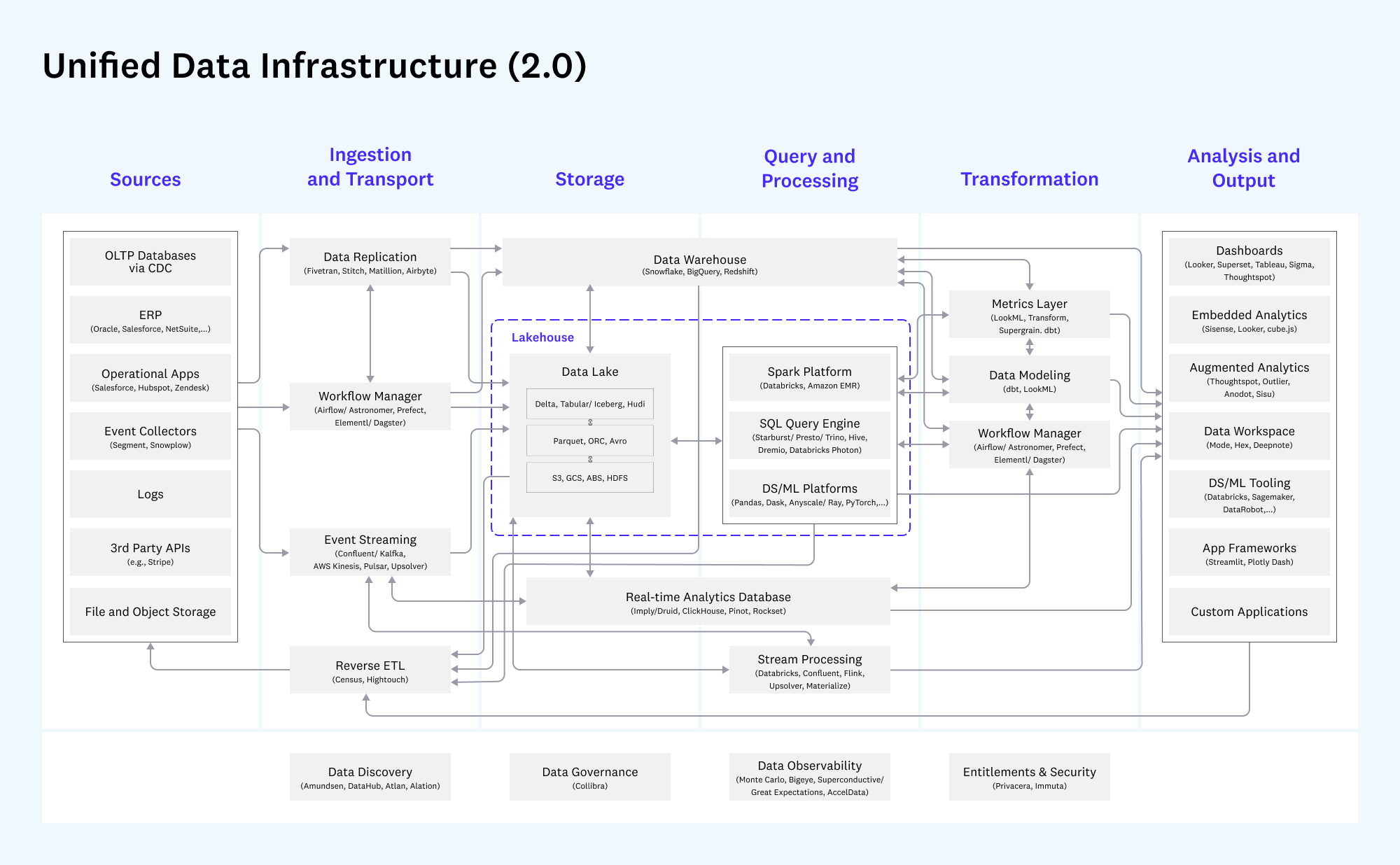

Key components of a Flux architecture include stream processing engines, event-time handling mechanisms, scalable event stores, and real-time analytics layers. These elements work together to preserve data integrity across distributed environments while keeping update latency low even under massive transaction volumes. For instance, global banking platforms now use Flux pipelines to detect fraud within milliseconds by correlating millions of transaction events per second, sharply reducing financial risk.
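To make the event-time idea concrete, here is a minimal Python sketch of one common stream-processing pattern: tumbling event-time windows used for a simple fraud-style velocity check. It assumes a hypothetical `Transaction` record and a hypothetical `flag_bursts` helper; neither is part of any Flux product or library, and the one-second window and three-transaction limit are illustrative values, not figures from real fraud systems. Because each event is bucketed by the time it occurred rather than the time it arrived, out-of-order events still land in the correct window.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Transaction:
    """Hypothetical transaction record used only for this illustration."""
    account: str
    amount: float
    event_time_ms: int  # when the transaction happened, not when it arrived


def flag_bursts(events, window_ms=1000, max_txns=3):
    """Assign each event to a tumbling event-time window and return the
    (account, window_start) pairs whose event count exceeds max_txns."""
    counts = defaultdict(int)
    flagged = set()
    for ev in events:  # events may arrive out of order
        # Window boundaries come from the event's own timestamp.
        window_start = (ev.event_time_ms // window_ms) * window_ms
        key = (ev.account, window_start)
        counts[key] += 1
        if counts[key] > max_txns:
            flagged.add(key)
    return flagged
```

A production stream processor would also track a watermark to decide when a window can be closed and its result emitted; this sketch only counts, so it illustrates window assignment rather than a complete pipeline.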

What sets Flux apart from competing models, such as traditional ETL (Extract, Transform, Load) or batch data warehousing, is its embrace of continuous processing. Whereas legacy pipelines refresh data hourly or daily, Flux systems run around the clock on live data streams, so models can be updated with every new input.
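The contrast between batch refreshes and continuous processing can be shown with a toy aggregate. In this minimal Python sketch (all names are hypothetical, chosen for illustration), `batch_average` recomputes over a full snapshot the way a scheduled ETL job would, while `RunningAverage` updates its state in constant time as each event arrives, so a current value is available after every input rather than only at the next refresh.

```python
from typing import Iterable, Iterator


def batch_average(values: list[float]) -> float:
    """Batch/ETL style: recompute over the whole snapshot on a schedule."""
    return sum(values) / len(values)


class RunningAverage:
    """Continuous style: O(1) state update per incoming event."""

    def __init__(self) -> None:
        self.count = 0
        self.mean = 0.0

    def update(self, x: float) -> float:
        # Incremental mean: shift the old mean toward the new value.
        self.count += 1
        self.mean += (x - self.mean) / self.count
        return self.mean


def stream_averages(values: Iterable[float]) -> Iterator[float]:
    """Yield the up-to-date average after every event."""
    ra = RunningAverage()
    for v in values:
        yield ra.update(v)
```

Fed the values 2, 4 and 6, the streaming version yields 2.0, 3.0 and 4.0 in turn, and its final value matches the batch result of 4.0.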

“Historically, businesses made decisions on snapshots of yesterday’s reality,” explains Raj Patel, a data engineering specialist at FluxTech Global. “Flux flips that script: every event triggers immediate recalibration, turning data into a living, breathing decision-making partner.”

Real-world implementations of Flux technology have reported striking results. In the retail sector, major e-commerce players use Flux-driven demand forecasting engines that adjust inventory levels in real time, cutting stockouts by up to 40% and reducing overstock costs.

In public utilities, water management systems powered by Flux analytics flag likely pipe failures before they occur, saving millions in emergency repairs and service disruptions. These case studies underscore Flux’s role not just as a technical advance but as a strategic asset.

Despite its advantages, adopting Flux architecture presents clear challenges. Legacy systems often resist integration, requiring careful middleware design or phased modernization. Engineers skilled in managing continuous data flows are in high demand, and ensuring data governance across rapidly evolving pipelines adds complexity. Yet industry experts argue that the long-term gains outweigh these hurdles: greater agility, resilience, and competitive edge.

Security and compliance are paramount in Flux environments. Given the constant data movement, robust encryption, role-based access controls, and real-time monitoring must be embedded at every layer. “We’ve broken down data into micro-shards processed in isolated streams,” notes cybersecurity lead Naomi Chen. “This approach minimizes exposure and ensures regulatory frameworks like GDPR or HIPAA are continuously upheld. No batch afterthought.”

Looking ahead, Flux technology is converging with artificial intelligence and machine learning to forge intelligent data ecosystems. Auto-tuning pipelines, anomaly-detection models, and predictive resource allocation are becoming standard features, reducing operational overhead and increasing system autonomy. As Asha Rao, CTO of DataForge, asserts: “Flux is evolving from a data pipeline into a cognitive infrastructure layer, one that naturally learns, adapts, and improves over time.”
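Anomaly detection on a live stream does not require storing history. As one illustration, and not a description of any specific product's models, the minimal Python sketch below keeps a running mean and variance with Welford's online algorithm and flags a reading that sits more than a chosen number of standard deviations from the mean seen so far. The class name `StreamingZScore`, the threshold of 3 and the five-reading warm-up are assumptions made for the example.

```python
import math


class StreamingZScore:
    """Hypothetical online anomaly detector (illustrative only).

    Flags a reading as anomalous when it lies more than `threshold`
    sample standard deviations from the mean of the readings seen so
    far. Uses Welford's online algorithm, so no history is stored.
    """

    def __init__(self, threshold: float = 3.0, warmup: int = 5) -> None:
        self.threshold = threshold
        self.warmup = warmup  # readings to collect before flagging (>= 2)
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean

    def observe(self, x: float) -> bool:
        is_anomaly = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))  # sample std dev
            if std > 0 and abs(x - self.mean) / std > self.threshold:
                is_anomaly = True
        # Welford update of the running mean and squared deviations.
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        return is_anomaly
```

In this sketch anomalous readings are folded into the running statistics like any other; a real detector might exclude them, or use a sliding window so the baseline can drift over time.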

In essence, Flux architecture is more than a technical upgrade; it is a paradigm shift. By enabling seamless, real-time data orchestration, it equips organizations to navigate complexity, seize opportunity, and anticipate risk with greater precision. The future of scalable, responsive digital operations is no longer hypothetical; it is being built, stream by stream, in real time. As businesses race to stay ahead, Flux stands as the indispensable foundation for tomorrow’s data-driven world.
